How to peacefully grow your service

Over almost two decades, I have seen numerous Internet services, ranging from small services for niche markets to Tier 1 services at major Internet companies. Apart from database and application design, the technical success of a growing product benefited significantly from adopting a few characteristics. In this article, I'll capture some of the ones that helped us.

Feature boundaries

Small products often start with a single team, a single code base, and a single database or storage layer. In the early days, this is essential for the team to make quick adjustments and pivots. Most early-stage services run as one or a few processes, which allows quick iteration. At some point, your product grows to hundreds of active developers. At this point, teams may begin to block each other because they have different development velocities, an ever-growing number of build and test steps, different requirements for how frequently they want to push to production, different production push success rates, and different availability promises. It would be a failure to slow the entire organization down to the pace of the slowest team. Instead, teams should be able to build and release independently, without fear of breaking or blocking others.

This is where feature boundaries come into play. Feature boundaries can be drawn either as API boundaries or as domain layers within the same process. Both approaches define a contract between teams and encapsulate the implementation details. As long as your API or domain layer keeps serving as a contract, you have a path to revise the implementation without major disruptions to other teams. Achieving continuous delivery without process boundaries (microservices) is hard, which is why most companies end up breaking their features out into new services so they can operate their lifecycles separately. Feature boundaries are hard to plan for in the early stages, so don't overdo them.
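To make the idea of a domain layer as a contract concrete, here is a minimal in-process sketch. The `ProfileStore` contract, its in-memory implementation, and `render_greeting` are all hypothetical names chosen for illustration. The point is that the caller depends only on the contract, so the owning team can replace the storage behind it without touching other teams' code.

```python
from __future__ import annotations

from typing import Protocol


class ProfileStore(Protocol):
    """Hypothetical contract the profile team exposes to other teams."""

    def get_display_name(self, user_id: str) -> str: ...

    def set_display_name(self, user_id: str, name: str) -> None: ...


class InMemoryProfileStore:
    """One implementation; callers never see the dict behind it."""

    def __init__(self) -> None:
        self._names: dict[str, str] = {}

    def get_display_name(self, user_id: str) -> str:
        return self._names.get(user_id, "anonymous")

    def set_display_name(self, user_id: str, name: str) -> None:
        self._names[user_id] = name


def render_greeting(store: ProfileStore, user_id: str) -> str:
    # Another team's code depends only on the contract, not on the storage.
    return f"Hello, {store.get_display_name(user_id)}!"
```

Swapping `InMemoryProfileStore` for a database-backed class with the same two methods would not require any change to `render_greeting`.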

Data autonomy

Feature boundaries are an essential step toward another important milestone: data autonomy. As features grow, teams will likely recognize that their early choices were not optimal. They will discover new access patterns or customer behaviors, or realize that the initial decisions were not economical from an operability or scalability perspective. At this point, teams would benefit immensely from being able to redesign their databases or storage layer. When data operations are encapsulated behind an API or a domain layer, teams often have a path to make changes without introducing breaking changes or significant regressions. Significant changes may require complete rewrites, including switching from one database to another. These changes require deprecating existing services or domain layers and migrating users with an online migration. API boundaries and domain layers help identify the extent of your feature set. Strong contracts document your capabilities and will assist you when you need to revisit your implementation.
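One common way to run an online migration behind an unchanged contract is dual writing with read-through copying. The sketch below is a simplified illustration, not a complete migration plan: `KeyValueStore` stands in for any backend, and `MigratingStore` is a hypothetical wrapper that keeps the same `get`/`put` contract while data moves from the old store to the new one.

```python
from __future__ import annotations


class KeyValueStore:
    """Stand-in for a storage backend (hypothetical)."""

    def __init__(self) -> None:
        self.rows: dict[str, str] = {}

    def get(self, key: str) -> str | None:
        return self.rows.get(key)

    def put(self, key: str, value: str) -> None:
        self.rows[key] = value


class MigratingStore:
    """Same get/put contract, backed by two stores during a migration."""

    def __init__(self, old: KeyValueStore, new: KeyValueStore) -> None:
        self.old, self.new = old, new

    def put(self, key: str, value: str) -> None:
        # Dual write: the old store stays authoritative until cutover.
        self.old.put(key, value)
        self.new.put(key, value)

    def get(self, key: str) -> str | None:
        value = self.new.get(key)
        if value is None:
            # Not migrated yet: read from the old store and copy the row over.
            value = self.old.get(key)
            if value is not None:
                self.new.put(key, value)
        return value
```

A real migration would also need a background backfill for rows that are never read and a plan for failures between the two writes. Consumers, however, keep calling the same two methods throughout.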

Data autonomy means the consumers of your data shouldn't have direct access to your database, shouldn't be able to run JOINs between their tables and yours, shouldn't be able to introduce new data operations without your approval, and shouldn't need to know the details of your storage layer to be a consumer. It's almost impossible to take away these permissive characteristics once the ship has sailed.

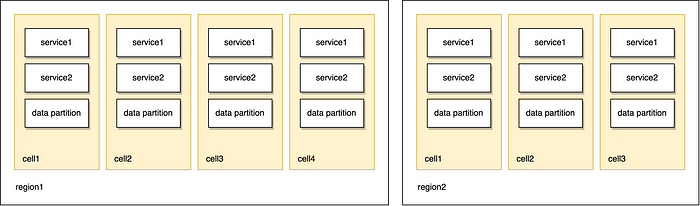

Cell-based architecture

A major reason large Internet companies can scale is cell-based architecture. It allows services to scale horizontally while keeping the blast radius reasonable. Cell-based architecture structures your workers and other resources into cells, often strongly isolated from each other, that are provisioned by automation. Cells can have different shapes, but most commonly each contains a horizontal slice of the product. A cell can serve its own data partition or rely on a regional or global database. Cells with their own data partitions can fulfill the horizontal sharding needs of your databases. Cells that map to data partitions also give teams the discipline not to introduce reads or writes that cross partition boundaries. These systems are easy to scale as long as you have the capacity to provision new cells and your external dependencies can handle the additional load.

Cell-based architecture forces you to invest in automation, which makes it easier to build new cells and regions as you grow. Cell-based architectures can also have tooling in place to migrate tenants between cells when capacity becomes a concern.
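Tenant routing and migration between cells can be illustrated with an explicit directory that maps each tenant to its cell. This is a minimal sketch under assumed names (`CellDirectory`, cell names like `cell-a`); the placement policy of picking the least-loaded cell is also an assumption. An explicit mapping, unlike hashing tenants onto a fixed number of cells, lets you add cells or move a single tenant without reshuffling everyone else.

```python
from __future__ import annotations


class CellDirectory:
    """Hypothetical map from each tenant to the cell that owns its data."""

    def __init__(self, cells: list[str]) -> None:
        self.cells = list(cells)
        self.assignments: dict[str, str] = {}

    def assign(self, tenant: str) -> str:
        # New tenants go to the least-loaded cell (ties: first in list order).
        if tenant not in self.assignments:
            load = {cell: 0 for cell in self.cells}
            for cell in self.assignments.values():
                load[cell] += 1
            self.assignments[tenant] = min(self.cells, key=lambda c: load[c])
        return self.assignments[tenant]

    def route(self, tenant: str) -> str:
        return self.assignments[tenant]

    def add_cell(self, cell: str) -> None:
        self.cells.append(cell)

    def move(self, tenant: str, target: str) -> None:
        # Flip the pointer only after the tenant's data is copied to `target`.
        if target not in self.cells:
            raise ValueError(f"unknown cell: {target}")
        self.assignments[tenant] = target
```

For example, after `add_cell("cell-c")`, new tenants land in the empty cell, and `move` can relieve a hot cell one tenant at a time.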

Cell-based architectures can be used to provide redundancy and automatic failover. A cell can be a replica of another cell, and the load balancer can fall back to the replica, now the new primary, if the original cell goes away. Replicas are often run in different availability zones to reduce the impact of a zonal outage.
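The failover behavior can be sketched as a router that promotes the replica when the primary is unhealthy. The `Cell` and `FailoverRouter` classes and the zone names are hypothetical. Real load balancers rely on health checks and data replication, which this sketch reduces to a boolean flag.

```python
from __future__ import annotations


class Cell:
    """A cell pinned to one availability zone (zone names are hypothetical)."""

    def __init__(self, name: str, zone: str) -> None:
        self.name, self.zone, self.healthy = name, zone, True


class FailoverRouter:
    """Sends traffic to the primary cell, falling back to its replica."""

    def __init__(self, primary: Cell, replica: Cell) -> None:
        self.primary, self.replica = primary, replica

    def target(self) -> Cell:
        if not self.primary.healthy and self.replica.healthy:
            # Promote the replica; the old primary becomes the standby.
            self.primary, self.replica = self.replica, self.primary
        if not self.primary.healthy:
            raise RuntimeError("no healthy cell available")
        return self.primary
```

The router deliberately does not fail back automatically when the old primary recovers. That avoids flapping, but it is a design choice rather than a requirement.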

Cell-based architectures limit the blast radius of an outage or a security event. Combined with automated continuous delivery practices, they let you quickly roll back changes without impacting all of your customers. See Automating safe, hands-off deployments for an example of how Amazon uses cells to reduce the impact of bad pushes.
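A wave-based rollout across cells shows how cells bound the impact of a bad push. In this minimal sketch, `staged_rollout`, the `deploy` and `healthy` callbacks, and the wave layout are assumptions for illustration. It deploys one wave of cells at a time and rolls back every touched cell as soon as a wave looks unhealthy, so later waves never receive the bad version.

```python
from __future__ import annotations

from typing import Callable


def staged_rollout(
    waves: list[list[str]],
    deploy: Callable[[str, str], None],
    healthy: Callable[[str], bool],
    new_version: str,
    old_version: str,
) -> bool:
    """Deploys wave by wave; rolls back every touched cell on the first failure."""
    done: list[str] = []
    for wave in waves:
        for cell in wave:
            deploy(cell, new_version)
            done.append(cell)
        # A real pipeline would bake for a while before checking health.
        if not all(healthy(cell) for cell in wave):
            for cell in reversed(done):
                deploy(cell, old_version)
            return False
    return True
```

Starting with a single small cell in the first wave keeps the initial exposure to a small slice of customers.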

Service and database dependencies

In the early days of a company, it's common not to pay attention to the growing list of service dependencies or database calls in the path of a user request. The feature set is small, few service dependencies exist, few data operations are available, and pages don't serve the results of tens of database reads. At this point, companies often fail to recognize that the availability of their product is directly tied to the availability of their dependencies. Every new dependency in your critical path compromises your availability, and you cannot beat the availability of the dependencies in your critical path. If an external dependency is available 99.9% of the time and is in your critical path, your path's availability is at most 99.9%, and lower once your own failures and those of your other dependencies are counted. This is why downstream services such as databases need more aggressive availability targets than the upstream data services or front-end servers that depend on them. The Calculus of Service Availability from Google SRE covers this topic in more depth.
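The arithmetic behind this is multiplication. Assuming every dependency is strictly required on the request path and fails independently (a simplification), the best-case availability of the path is the product of the individual availabilities. The figures below are hypothetical: a service at 99.95% with three critical dependencies at 99.9% each lands at roughly 99.65%.

```python
from __future__ import annotations

import math


def serial_availability(availabilities: list[float]) -> float:
    """Best-case availability when every component is in the critical path.

    Assumes independent failures; correlated failures change the picture.
    """
    return math.prod(availabilities)


# Hypothetical numbers: your service plus three critical dependencies.
path = serial_availability([0.9995, 0.999, 0.999, 0.999])
print(f"{path:.4%}")  # roughly 99.65%
```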

If you need to rely on a dependency with a lower SLO while maintaining a higher SLO yourself, you can move calls to it out of the critical path (e.g. running them in background jobs), fall back to a default or cheaper behavior, or gracefully degrade the experience.
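The fallback option can be sketched as a wrapper that bounds how long you wait on a lower-SLO dependency and returns a default when the call fails or is too slow. `call_with_fallback`, the pool size, and the timeout values are hypothetical choices. Note that a timed-out call keeps running in its worker thread. The wrapper only stops waiting for it.

```python
from __future__ import annotations

import concurrent.futures
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool for calls to the lower-SLO dependency (size is arbitrary).
_pool = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def call_with_fallback(fn: Callable[[], T], fallback: T, timeout_s: float) -> T:
    """Calls a dependency, returning `fallback` if it raises or is too slow."""
    future = _pool.submit(fn)
    try:
        return future.result(timeout=timeout_s)
    except Exception:
        # Covers both errors raised by `fn` and concurrent.futures.TimeoutError.
        return fallback
```

For example, a recommendations widget could call `call_with_fallback(fetch_recommendations, [], 0.2)`, where `fetch_recommendations` is a hypothetical client call, and render an empty list instead of failing the request.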

Graceful degradation

Graceful degradation is one of the most undervalued tools in the early stages of a service, when transient errors still have only a minor impact. Graceful degradation allows you to serve a page, or even an API call, with a degraded experience instead of failing it. Imagine a web page whose navigation bar and sidebars have been bloated with new features over the years. Eventually, you may find that the page has become too expensive to serve. Minimizing the number of external dependencies would be the best next step, but as a short-term remedy you can degrade the experience, for example by displaying an error message or hiding a section. Graceful degradation may confuse customers if it's not done correctly, so choose how you do it wisely!
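Hiding a section can be sketched as rendering the page from a required main part plus optional sections, where a failing optional section is dropped instead of failing the whole page. `render_page` and the section callables are hypothetical.

```python
from __future__ import annotations

from typing import Callable


def render_page(main: Callable[[], str], optional_sections: list[Callable[[], str]]) -> str:
    """The main content must succeed; optional sections are hidden if they fail."""
    parts = [main()]  # A failure here still fails the whole page.
    for render in optional_sections:
        try:
            parts.append(render())
        except Exception:
            # Degrade: hide the section instead of returning an error page.
            # A real service would also log and count these failures.
            continue
    return "\n".join(parts)
```

Deciding which sections are optional is the product decision the paragraph above warns about. Hiding a cart summary, for instance, may confuse customers more than hiding a recommendations sidebar.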

Unique identifiers

If I had a time machine, I'd go back and switch some of my identifiers to client-generated globally unique identifiers (GUIDs). Autoincremented identifiers are convenient, and they are human-readable, but once you grow beyond a single primary database, they become showstoppers. If you adopt a cell-based architecture with self-contained database partitions, they make it hard to move tenants between cells. If you've exposed the identifiers to customers in links, it becomes impossible to change them without breaking those links. If you've represented them as integers in your statically typed languages or Protobuf files, it becomes a pain to revisit them. Making this switch retroactively is so painful that I've often wished we had chosen GUIDs from the early days for select identifiers.
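The tenant-move problem can be seen in a small sketch. Two partitions that each autoincrement from 1 produce colliding IDs, so their rows cannot be merged into one cell without renumbering. Randomly generated version 4 UUIDs from Python's standard `uuid` module need no coordination between partitions or clients. `autoincrement_ids` is a hypothetical stand-in for a database sequence.

```python
import itertools
import uuid


def autoincrement_ids(n: int) -> list:
    # Each database partition keeps its own counter starting at 1.
    counter = itertools.count(1)
    return [next(counter) for _ in range(n)]


cell_a = autoincrement_ids(3)
cell_b = autoincrement_ids(3)
# Moving cell_b's tenant into cell_a collides on ids 1, 2 and 3.
collisions = set(cell_a) & set(cell_b)

# Client-generated random (version 4) UUIDs need no shared counter.
guids_a = [uuid.uuid4() for _ in range(3)]
guids_b = [uuid.uuid4() for _ in range(3)]
guid_collisions = set(guids_a) & set(guids_b)
```

Storing the UUID as a string or as 16 bytes in your schemas and Protobuf files, rather than as an integer, also avoids the type change described above.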

Idempotency

When making RPC calls, transient failures such as network problems may cause calls to be dropped or to time out. RPC calls might be retried and might therefore be received more than once by the server. Idempotency makes it safe for the receiver to handle duplicate requests without compromising the integrity of the data. Designing idempotent systems is hard, but retrofitting existing systems to become idempotent is even harder. Idempotency applies to eventing as much as it applies to RPCs, and designing events to be idempotent is a major contribution you can make early on.
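A common technique is an idempotency key. The client generates a unique key once per logical operation and reuses it on every retry, and the server returns the stored result for a key it has already processed. `PaymentServer` and `charge` are hypothetical. A production version would commit the dedup record and the side effect atomically, expire old keys, and handle concurrent duplicates.

```python
from __future__ import annotations


class PaymentServer:
    """Deduplicates retried requests by a client-supplied idempotency key."""

    def __init__(self) -> None:
        self.balance = 0
        self._responses: dict[str, int] = {}

    def charge(self, idempotency_key: str, amount: int) -> int:
        # A retry with a known key gets the original response, not a second charge.
        if idempotency_key in self._responses:
            return self._responses[idempotency_key]
        self.balance += amount
        self._responses[idempotency_key] = self.balance
        return self.balance
```

The client might create the key with `str(uuid.uuid4())` before the first attempt. A client-generated GUID is a natural fit here.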

SLOs and quotas

Services should have service level objectives (SLOs) to ensure you don't degrade the customer experience. Defining them early in the design phase, even while prototyping, helps you test your design against a theoretical target. It also helps you eliminate unnecessary dependencies, or dependencies with poor SLOs, early on. SLOs also explain the limitations others should consider when they rely on your service directly.
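Turning an SLO into an error budget makes the theoretical target tangible. Over a 30-day window there are 43,200 minutes, so a 99.9% availability SLO allows about 43.2 minutes of unavailability and 99.99% allows about 4.32 minutes. The helper below is a hypothetical name, and the 30-day window is an assumed default.

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability within the window for a given SLO."""
    return (1 - slo) * window_days * 24 * 60


print(round(error_budget_minutes(0.999), 2))   # 43.2
print(round(error_budget_minutes(0.9999), 2))  # 4.32
```

If a single dependency in your critical path routinely consumes most of that budget, the design does not meet the target.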

Quotas or rate limits allow you to reject aggressive call patterns from upstream services. Introducing quotas later could be a breaking change unless they are permissive enough not to reject existing traffic. It's useful to define them early and put them in place.
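A token bucket is one widely used way to enforce a per-caller quota. It is a swapped-in technique for illustration, not something the article prescribes. Each caller gets `rate` tokens per second up to a burst of `capacity`, and a request is rejected when no token is left. The class name and the injectable `clock` parameter, which makes it testable, are assumptions.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Per-caller quota: `rate` requests/second with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        # Rejected: an HTTP API would typically answer 429 Too Many Requests,
        # a gRPC API RESOURCE_EXHAUSTED.
        return False
```

Keeping one bucket per upstream caller lets you throttle an aggressive client without affecting well-behaved ones.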

Observability

There are two critical, organizationally hard problems in observability: which dimensions to collect, and propagation standards.

Dimensions are arbitrary labels collected with telemetry. They allow engineers to narrow down telemetry to identify the blast radius during an outage. For example, being able to break down a service's latency metrics by Kubernetes cluster name allows you to see which clusters are affected. Dimensions can also be used to pivot to other relevant telemetry: you may want to view the logs for the affected clusters to troubleshoot. Dimensions, when consistently and automatically applied, improve your incident response and troubleshooting capabilities. However, dimensions can get out of hand if their cardinality becomes too high for metrics. It's critical to build the foundations that let you adjust dimensions.
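One way to keep dimensions adjustable is to route all metric labels through a single choke point that enforces an allowlist and a cap on distinct series. The sketch below is hypothetical (`RequestCounter`, the `overflow` label, and the cap are illustrative, and a real service would record latency histograms rather than plain counts). It drops unapproved dimensions such as a per-user ID and buckets new label sets into one overflow series once the cap is reached.

```python
from __future__ import annotations

from collections import Counter


class RequestCounter:
    """Counts requests by approved dimensions, capping distinct label sets."""

    def __init__(self, allowed_dims: set[str], max_series: int) -> None:
        self.allowed_dims = allowed_dims
        self.max_series = max_series
        self.series: Counter[tuple] = Counter()

    def record(self, labels: dict[str, str]) -> None:
        # Keep only approved dimensions, e.g. the cluster name but not a user ID.
        key = tuple(sorted((k, v) for k, v in labels.items() if k in self.allowed_dims))
        if key not in self.series and len(self.series) >= self.max_series:
            # Past the cap: count into a single overflow series instead.
            key = (("overflow", "true"),)
        self.series[key] += 1
```

Because every label passes through `record`, changing which dimensions are collected becomes a one-line configuration change rather than a hunt through every call site.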

Propagation standards, such as the trace context format or how dimensions are propagated, are another area where retrofits are costly and sometimes impossible. The earlier you align on request headers and runtime context propagation, the smaller the problem will be.

—

The success of an Internet service relies on a wide range of criteria, such as good database design, capacity planning, CI/CD capabilities, understanding the limitations of your service dependencies, and, most importantly, technical and product talent. In this article, I tried to capture some characteristics that are often underestimated but easily pay off. Some early-stage decisions can save years of investment in the long run, and you can spend those years building great products for your customers instead of fighting fires.